June 2025

Until a few years ago, nobody expected computer vision systems to be able to talk. They were buried inside larger systems, with limited capabilities and little transparency. More recently, we have been experimenting with so-called Vision Language Models (VLMs). Researchers’ ambition is to integrate the vision and language skills of AI systems into a multimodal artificial intelligence. In the future, VLMs could benefit applications in domains ranging from medicine to education to manufacturing.

We are still in the early stages of developing these models and of figuring out how to move beyond impressive demos, unconvincing deep learning architectures, and embarrassing limitations.

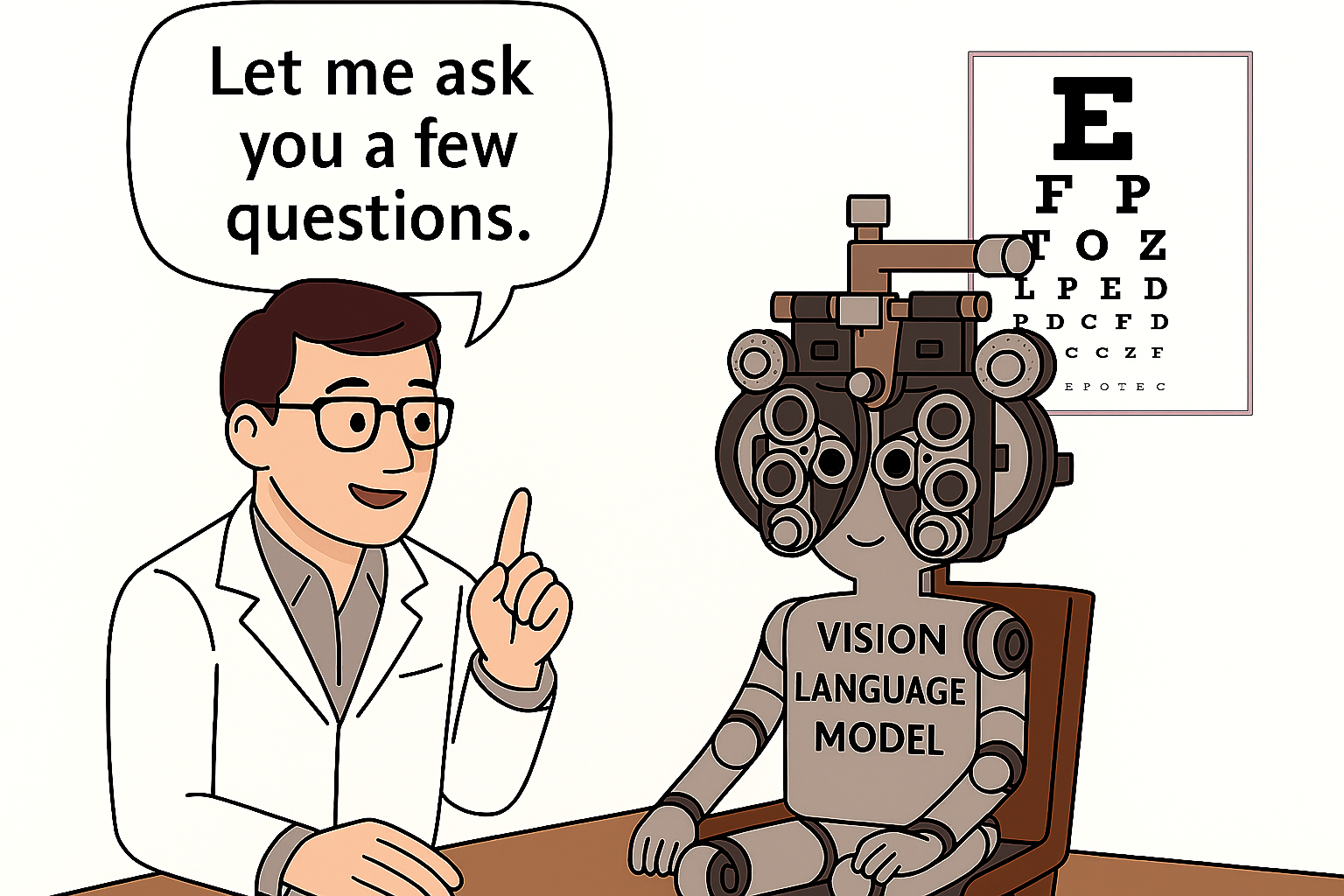

We believe that, first and foremost, we should start asking VLMs the right questions in a fully controlled framework, much like an eye doctor’s test. The framework should provide the methodological tools to design a comprehensive set of stimuli and expected responses without resorting to human annotation of biased and noisy data sets. It should then yield error signals, so that we can both assess the quality of architectures and improve the models and their performance. Last but not least, it should allow us to explore cognitive tasks of increasing complexity, from classification to reasoning, and beyond the visual scene.
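To make the idea of a controlled framework concrete, here is a minimal Python sketch. It is an illustration only and not CIVET's actual implementation: the attribute vocabularies, the `Stimulus` class, the question wording, and the `toy_model` stand-in are all assumptions made for this example. It enumerates every combination of a few controlled attributes, derives the expected answer from the generating parameters rather than from annotators, and breaks the error signal down by object position.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical attribute vocabularies; not taken from the CIVET paper.
SHAPES = ("circle", "square", "triangle")
COLORS = ("red", "green", "blue")
POSITIONS = tuple((row, col) for row in range(3) for col in range(3))


@dataclass(frozen=True)
class Stimulus:
    shape: str
    color: str
    position: tuple  # (row, col) cell on a 3x3 grid

    def question(self):
        return "What color is the object in the image?"

    def answer(self):
        # Ground truth comes from the generating parameters, not from annotators.
        return self.color


def all_stimuli():
    """Enumerate every combination of the controlled attributes."""
    return [Stimulus(s, c, p) for s, c, p in product(SHAPES, COLORS, POSITIONS)]


def accuracy_by_position(stimuli, predict):
    """Score a model callable and break accuracy down by grid cell."""
    hits, totals = {}, {}
    for stim in stimuli:
        correct = predict(stim).strip().lower() == stim.answer()
        totals[stim.position] = totals.get(stim.position, 0) + 1
        hits[stim.position] = hits.get(stim.position, 0) + int(correct)
    return {pos: hits[pos] / totals[pos] for pos in totals}


def toy_model(stim):
    # Stand-in for a VLM: always right, except it answers "red" in the top-left cell.
    return "red" if stim.position == (0, 0) else stim.color


stimuli = all_stimuli()
report = accuracy_by_position(stimuli, toy_model)
```

In a real pipeline each `Stimulus` would also be rendered to an image and `predict` would call the VLM under test with that image and the question. Because the set is exhaustive and balanced, a drop in accuracy for one grid cell can be attributed to position rather than to an uneven mix of shapes or colors.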

In search of this new approach to designing, training, and evaluating VLMs, we have proposed CIVET and reviewed the abilities of state-of-the-art VLMs. CIVET promotes a novel investigative methodology for estimating and improving the performance of VLMs, applicable to diverse cognitive tasks. In the paper you will also find a review of current VLM architectures, a novel approach to assessing them exhaustively, and insights into how to go beyond the state of the art.

Paper: https://arxiv.org/pdf/2506.05146?